dexterous interaction lab

靈巧互動實驗室

dexterous: done with mental or physical skill, quickness, or grace (Merriam-Webster)

From using chopsticks to manufacturing, humans skillfully perform a wide variety of tasks; yet when interacting with modern computers such as our smartphones, we mostly just tap on screens. Our lab envisions a future of Human-Computer Interaction (HCI) that leverages and even augments our dexterity.

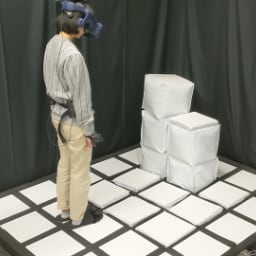

One key to supporting human dexterity is haptic feedback technology. To perform tasks, humans combine precise senses of touch and force with muscular control. While the most advanced haptic devices, developed for teleoperation, often require large infrastructure, our lab brings the rich sense of haptics to wearables, Augmented Reality (AR), and assistive technology. We investigate novel haptic feedback that goes beyond simple notification vibrations: for example, miniature fingernail devices that provide a sense of touch to assist with repairs, a robotic exoskeleton that guides hand poses for playing musical instruments, and a new form of tactile sensory substitution that helps Blind users grasp everyday objects. Our lab believes that enabling dexterous interaction in computing can enhance everyday experiences and empower people both physically and cognitively.

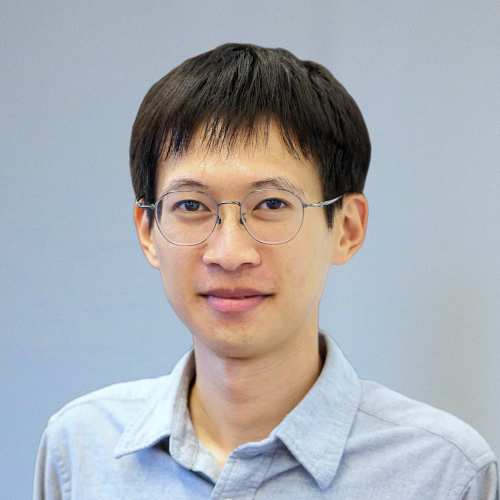

Dexterous Interaction Lab (DexLab) is a newly established research lab led by Prof. Shan-Yuan Teng (PhD, University of Chicago) of the Department of Computer Science & Information Engineering at National Taiwan University (NTU), Taipei, Taiwan.

Research themes

Our lab aims to explore what interactive devices will look like, feel like, and be like to live with, far into the future (e.g., 10, 20, or 50 years from now). Specifically, we pursue these themes by inventing novel software-hardware systems (e.g., wearables) and conducting in-depth user studies (e.g., of perception and user experience):

- How might we design interactive systems that increase users' dexterity? While AI systems have advanced in the text and video domains, they offer little assistance for physical tasks (repair, crafts, cooking, sports, etc.). How might we design systems that help users perform better, and even learn better?

- How might we design multisensory systems in the wild? Most multisensory systems are confined to specific settings (e.g., labs, theme parks, simulators); how might we enable richer experiences that integrate into our everyday mobile lives?

- How might we design devices for more people? Our bodies are all different; how can we design systems that support individual needs, such as assistive tools for people with disabilities?

Apply to the lab

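The lab is recruiting students, including undergraduates for research projects (專題生) and Master's/PhD students from either the Department of Computer Science and Information Engineering or the Graduate Institute of Networking and Multimedia. See Apply to the lab.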

Principal investigator

Shan-Yuan Teng [CV]

Assistant Professor

鄧善元 助理教授

Department of Computer Science and Information Engineering | Graduate Institute of Networking and Multimedia

tengshanyuan@csie.ntu.edu.tw

*Shan-Yuan is the first name. Feel free to email in English or Chinese (Traditional).

News

- Shan-Yuan wrote an AI policy for the lab.

- The lab space renovation is complete (with bean bags)! See lab hacks for a glimpse of the fun.

Teaching

- Spring, 2026 CSIE7641: Multimodal Human-Computer Interaction (graduate)

- Fall, 2025 CSIE5647: Making and Inventing Interactive Devices (undergrad)

Lab visit

Please contact Shan-Yuan to arrange a visit. We are in the CSIE Building, Room 344 (Dexterous Interaction Lab), No. 1, Section 4, Roosevelt Rd., Da'an District, Taipei City, Taiwan 10617.

Supported by

Ministry of Education, Taiwan

Yushan Young Fellowship

玉山青年學者